The risks, impact, and benefits of using AI-generated content

In software development and security, AI can involve automating code generation, identifying vulnerabilities and detecting potential threats.

Artificial Intelligence (AI) has had a significant impact on the world over the last year. Many individuals, companies, and (unsurprisingly) journalists have pushed new developments such as ‘ChatGPT’ to the forefront of people’s minds.

Discussions are happening around the world, with differing views on whether the use of AI today is a benefit or a detriment.

As AI technology continues to evolve, it offers new opportunities for developers and security professionals, such as those within CovertSwarm, to improve their work and stay ahead of emerging threats. In this article, we will explore the impact of AI generation on developers and security professionals and discuss how we believe these roles can benefit from this technology.

We will also cover best practices for adopting AI responsibly and look at real-world examples of AI generation in action and how we’ve incorporated this modern-day marvel into the CovertSwarm Offensive Operations Center.

The use of AI in a development role offers several benefits. For example, AI-generated code can help speed up the development process, allowing developers to focus on more complex tasks. Additionally, AI algorithms can identify potential errors or inefficiencies and suggest improvements to code, leading to higher-quality software before it goes through the QA process (or, worse, ends up in production).

Another advantage for developers is that AI can make coding more accessible. With AI-generated code, developers with limited coding experience can quickly generate snippets that meet their requirements, with fewer syntax barriers to overcome when adopting a new language.

For example, GitHub Copilot uses OpenAI models (via Microsoft) to generate code from natural language input, helping developers accelerate the coding process and produce code snippets more efficiently.

However, there are also potential challenges associated with the use of AI for developers. One concern is relying too heavily on AI-generated code, which can be difficult to maintain and debug, or may contain bugs and errors.

Additionally, there is a risk of the AI-generated code being overly generic and not meeting the specific needs of the project.

A more concerning aspect is the use of code that breaches software licensing. Many models are trained on open-source software, and some (such as GitHub Copilot) may also use private repositories if the end user or organization has enabled this.

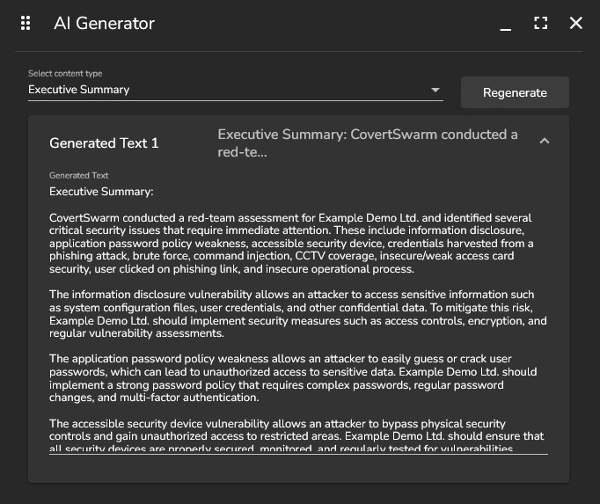

Have you ever wondered how cybersecurity experts generate reports on potential vulnerabilities in a system or application? Traditionally, these reports were created manually by experienced security professionals, which could be time-consuming and prone to errors. But now, with the rise of artificial intelligence (AI), vulnerability reporting and penetration testing reports can be generated much more efficiently.

AI is being used to automate the process of generating these reports. Using machine learning algorithms, AI can analyze large amounts of data and create reports that are accurate and comprehensive in a fraction of the time it would take a security professional. This frees up security professionals to focus on more complex (and value-driving) tasks like analyzing the results of the tests and developing solutions to address any discovered vulnerabilities.

One of the benefits of using AI in vulnerability reporting and penetration testing is that it can identify potential vulnerabilities that may have been missed by security professionals. AI can analyze vast amounts of data and identify patterns and anomalies that may be overlooked by humans, which means that vulnerabilities can be identified and addressed more quickly, reducing the risk of a security breach.

However, it is important to remember that AI is not a replacement for security professionals. Security professionals are still needed to guide activities and to review and validate AI-generated reports. Additionally, the AI algorithms used must be well trained and kept up to date to ensure accurate results, and when natural language processing is used, the prompts must also be maintained and regularly audited to ensure the accuracy and security of the data that is input to the model(s).

There are also challenges associated with AI for security professionals. One concern is overreliance on AI-generated security controls, which can create a false sense of security if they produce false positives or false negatives.
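One way to keep that risk visible is to measure it. The following is a minimal sketch, assuming each finding has an ID and that a human reviewer has confirmed which findings are real. It counts false positives (flagged but not real) and false negatives (real but missed) and derives precision and recall. The function names and finding IDs are hypothetical and not part of any particular tool.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    """Counts from comparing AI-flagged findings with human-validated results."""
    true_positives: int
    false_positives: int
    false_negatives: int


def compare_findings(ai_flagged: set[str], validated: set[str]) -> Outcome:
    """Compare finding IDs flagged by an AI tool with those a human confirmed."""
    return Outcome(
        true_positives=len(ai_flagged & validated),
        false_positives=len(ai_flagged - validated),  # flagged but not real
        false_negatives=len(validated - ai_flagged),  # real but missed
    )


def precision(o: Outcome) -> float:
    """Share of flagged findings that were real."""
    flagged = o.true_positives + o.false_positives
    return o.true_positives / flagged if flagged else 0.0


def recall(o: Outcome) -> float:
    """Share of real findings that were flagged."""
    real = o.true_positives + o.false_negatives
    return o.true_positives / real if real else 0.0


if __name__ == "__main__":
    # Hypothetical finding IDs.
    ai = {"F1", "F2", "F3", "F4"}
    human = {"F2", "F3", "F5"}
    result = compare_findings(ai, human)
    print(result)
    print(f"precision={precision(result):.2f} recall={recall(result):.2f}")
```

With the sample data, the tool flags two findings that a human did not confirm and misses one real finding, giving a precision of 0.50 and a recall of about 0.67. Tracking these numbers over time is one way to judge how much trust a tool's output deserves.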

Overall, AI is a valuable tool for vulnerability reporting and penetration testing. By automating some aspects of the process, AI can help cybersecurity professionals work more efficiently, identify potential vulnerabilities more quickly, and produce reports in less time. While it is not a silver bullet, AI can be a genuinely helpful tool.

While AI generation offers many benefits, there are also significant risks associated with online-based AI generation, particularly when it comes to potentially sensitive information being sent to the service.

When using online AI generation services, users may be required to upload data that contains potentially sensitive information, such as client data or proprietary code. This data may be processed on third-party servers, which may be subject to data breaches or other security vulnerabilities.

Additionally, there is a risk that an online AI content generation service may not be secure, and the data may be intercepted by hackers or other malicious actors. This could result in the theft or exposure of sensitive information, which could have severe consequences for individuals or companies.

To mitigate these risks, it is essential to take steps to ensure the security and privacy of data when using online AI generation services. This may include using encryption to protect data in transit and at rest, ensuring that the online service has adequate security measures in place, and carefully reviewing the terms of service and privacy policy before using the service.
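For the encryption-in-transit part of that advice, a minimal sketch using only Python's standard library might refuse any endpoint that is not HTTPS and build a TLS context that verifies certificates and hostnames. The endpoint URL is a hypothetical placeholder, and this sketch does not cover encryption at rest or how the provider handles data once it arrives.

```python
import ssl
from urllib.parse import urlparse


def require_https(url: str) -> str:
    """Reject any AI service endpoint that would send data unencrypted."""
    if urlparse(url).scheme != "https":
        raise ValueError(f"refusing non-HTTPS endpoint: {url}")
    return url


def build_tls_context() -> ssl.SSLContext:
    """Create a client TLS context that verifies certificates and hostnames."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and check_hostname by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return ctx


if __name__ == "__main__":
    # Hypothetical endpoint; a request could then be made with
    # urllib.request.urlopen(url, context=ctx).
    url = require_https("https://ai.example.com/v1/generate")
    ctx = build_tls_context()
    print(url, ctx.verify_mode == ssl.CERT_REQUIRED, ctx.check_hostname)
```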

It is also important to consider whether online AI content generation is necessary for a particular task or whether there are alternative solutions that do not require the transmission of potentially sensitive data. By carefully evaluating the risks and benefits of online AI generation and taking steps to mitigate those risks, individuals and companies can use this technology safely and effectively.

To make the most of AI generation, developers and security professionals should follow best practices that ensure the technology is used effectively and ethically. Some of these best practices include:

There are several real-world examples of AI generation in action that demonstrate its potential benefits for developers and security professionals.

For example, Google’s AutoML project uses machine learning algorithms to automate the process of training neural networks. This allows developers to build custom AI models without requiring a deep understanding of machine learning.

In the field of security, companies like Darktrace claim to use AI algorithms to detect and respond to potential threats in real time. Darktrace’s technology uses unsupervised machine learning to analyze network traffic and identify anomalous behavior that may indicate a potential security breach.
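Darktrace’s models are proprietary, so the following is not its method. As a much simpler illustration of the underlying idea (learn what normal traffic looks like, then flag departures from it), this Python sketch builds a baseline from a host’s past hourly outbound traffic and flags new values more than three standard deviations from the mean. The traffic figures and the threshold of 3.0 are hypothetical.

```python
import statistics


def build_baseline(history: list[float]) -> tuple[float, float]:
    """Learn 'normal' from past observations as a (mean, sample stdev) pair."""
    return statistics.fmean(history), statistics.stdev(history)


def is_anomalous(value: float, baseline: tuple[float, float], threshold: float = 3.0) -> bool:
    """Flag a value whose z-score against the baseline exceeds the threshold."""
    mean, stdev = baseline
    if stdev == 0:
        # No variation in the history: anything different is unusual.
        return value != mean
    return abs(value - mean) / stdev > threshold


if __name__ == "__main__":
    # Hypothetical hourly outbound traffic (MB) for one host.
    history = [120, 130, 125, 118, 127, 122, 131, 124]
    baseline = build_baseline(history)
    for observed in (126, 900):
        print(observed, is_anomalous(observed, baseline))
```

A z-score against a fixed baseline is easy to reason about, but real traffic has daily and weekly cycles and many correlated signals, which is one reason commercial tools use richer models.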

Here at CovertSwarm, we have implemented AI generation, initially to assist our Swarm of ethical hackers in improving both the efficiency and the quality of the content we provide to clients. As a security company, our main concern is of course the confidentiality of the data we use when interacting with AI model services, so every content prompt is stripped of any potentially identifiable information.

This means the generated content lacks full contextual information, but our expert teams use these AI-drafted responses to cut down the time spent on reporting, so that more time is spent identifying and discovering potential security risks for our clients.
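A minimal sketch of that kind of prompt sanitization, assuming a simple regex-based approach, is shown below. It is not CovertSwarm’s actual pipeline: the patterns (email and IPv4 addresses), the placeholders, and the per-engagement list of client terms are hypothetical, and a real implementation would need broader coverage (IPv6, hostnames, usernames, credentials) and its own tests.

```python
import re

# Hypothetical, deliberately small pattern set: (regex, placeholder).
PATTERNS = [
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "[EMAIL]"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[IPV4]"),
]


def redact(text: str, client_terms: list[str]) -> str:
    """Replace potentially identifying details with placeholders before prompting."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    # Client names/domains supplied per engagement; longest first so that
    # 'Acme Corp' is replaced before 'Acme'.
    for term in sorted(client_terms, key=len, reverse=True):
        text = re.sub(re.escape(term), "[CLIENT]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    finding = "Acme Corp's VPN at 10.0.4.17 leaks admin@acme.com"
    print(redact(finding, ["Acme Corp", "acme.com"]))
```

Replacing longer client terms first keeps a name such as ‘Acme Corp’ from being left half-redacted by a shorter term such as ‘Acme’.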

AI generation offers significant benefits for many different roles. In this article we have focused on developers and security professionals, for whom AI can automate routine tasks and improve threat detection and response. However, it is essential to adopt AI responsibly and to understand its limitations, so that it enhances rather than replaces human judgment and creativity.

By following best practices surrounding security and privacy and staying up to date with the latest developments in AI technology, developers and security professionals can leverage AI generation to improve their work and stay ahead of emerging threats.

And of course, where would we be if a large part of this blog post hadn’t been written with the help of AI… though it was carefully reviewed and adjusted by a human.
